Mini-AutoDiff: Reverse-Mode Automatic Differentiation

Published:

Technologies: Python, NumPy, Linear Algebra, Graph Algorithms

Description

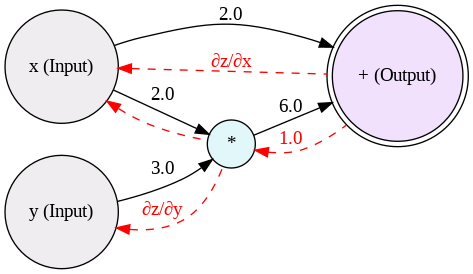

- Core Engine: Developed a computational graph framework from scratch that computes gradients via backpropagation (reverse-mode differentiation), mimicking the core functionality of PyTorch’s autograd.
- Operator Implementation: Engineered 11 core operations (including `matmul`, `solve`, `logdet`, and `cholesky`) with custom Vector-Jacobian Products (VJPs) that propagate gradients through complex linear algebra expressions.
- Advanced Validation: Validated the engine by computing gradients of a multivariate Gaussian log-likelihood, whose derivatives involve matrix inverses and determinants.
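The three points above can be illustrated together in a short NumPy sketch. It is a minimal example under stated assumptions, not the project's code:

- The `Node` class, the op helpers, and the column-vector shape convention are hypothetical.
- Only a handful of the 11 operations are shown; `cholesky` is omitted.
- `logdet` assumes a positive determinant.

The sketch builds the log-likelihood graph, runs a reverse topological pass, and compares the resulting gradients with the closed-form ones.

```python
import numpy as np

# Minimal reverse-mode sketch; Node and the op helpers are hypothetical names.


class Node:
    """A value in the computational graph plus one VJP per parent (hypothetical design)."""

    def __init__(self, value, parents=(), vjps=()):
        self.value = np.asarray(value, dtype=float)
        self.parents = parents  # Nodes this value was computed from
        self.vjps = vjps        # vjps[i] maps this node's gradient to parents[i]'s gradient
        self.grad = None

    def backward(self):
        # Topologically sort so every node's gradient is fully accumulated before it propagates.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        for node in order:
            node.grad = np.zeros_like(node.value)
        self.grad = np.ones_like(self.value)  # seed: d(output)/d(output) = 1
        for node in reversed(order):
            for parent, vjp in zip(node.parents, node.vjps):
                parent.grad = parent.grad + vjp(node.grad)


def add(a, b):
    return Node(a.value + b.value, (a, b), (lambda g: g, lambda g: g))


def sub(a, b):
    return Node(a.value - b.value, (a, b), (lambda g: g, lambda g: -g))


def scale(a, c):
    return Node(c * a.value, (a,), (lambda g: c * g,))


def transpose(a):
    return Node(a.value.T, (a,), (lambda g: g.T,))


def matmul(a, b):
    # C = AB  ->  A_bar = C_bar B^T,  B_bar = A^T C_bar
    return Node(a.value @ b.value, (a, b),
                (lambda g: g @ b.value.T, lambda g: a.value.T @ g))


def solve(A, b):
    # x = A^{-1} b  ->  b_bar = A^{-T} x_bar,  A_bar = -b_bar x^T
    x = np.linalg.solve(A.value, b.value)
    return Node(x, (A, b),
                (lambda g: -np.linalg.solve(A.value.T, g) @ x.T,
                 lambda g: np.linalg.solve(A.value.T, g)))


def logdet(A):
    # y = log det A  ->  A_bar = y_bar A^{-T}; assumes det(A) > 0 (e.g. A is SPD)
    _, ld = np.linalg.slogdet(A.value)
    inv_T = np.linalg.inv(A.value).T
    return Node(np.full((1, 1), ld), (A,), (lambda g: g[0, 0] * inv_T,))


def gaussian_loglik(x, mu, Sigma):
    """log N(x; mu, Sigma) for column vectors x, mu of shape (d, 1); returns a (1, 1) Node."""
    d = x.value.shape[0]
    r = sub(x, mu)
    quad = matmul(transpose(r), solve(Sigma, r))  # r^T Sigma^{-1} r
    const = Node(np.full((1, 1), -0.5 * d * np.log(2 * np.pi)))
    return add(add(scale(quad, -0.5), scale(logdet(Sigma), -0.5)), const)


# Compare against the closed-form gradients on a random SPD covariance.
rng = np.random.default_rng(0)
d = 3
M = rng.standard_normal((d, d))
Sigma_val = M @ M.T + d * np.eye(d)
x_val, mu_val = rng.standard_normal((d, 1)), rng.standard_normal((d, 1))

x, mu, Sigma = Node(x_val), Node(mu_val), Node(Sigma_val)
ll = gaussian_loglik(x, mu, Sigma)
ll.backward()

r = x_val - mu_val
S_inv = np.linalg.inv(Sigma_val)
grad_mu = S_inv @ r                                    # dl/dmu
grad_Sigma = 0.5 * (S_inv @ r @ r.T @ S_inv - S_inv)   # dl/dSigma (entries treated as independent)
print(np.allclose(mu.grad, grad_mu), np.allclose(Sigma.grad, grad_Sigma))  # True True
```

Each operation stores one VJP closure per parent, so its backward rule sits next to its forward computation. The reverse topological order ensures a node's gradient is complete before it is pushed upstream. For example, `r` receives contributions from both `transpose` and `solve` before its gradient flows on to `mu`.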

Figure: Visualizing the forward pass (black) and backward gradient flow (red) in the custom engine.
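In reverse mode, each backward step maps the gradient arriving at an operation's output to gradients for its inputs. It does this without ever forming the full Jacobian. Below, $\bar{Z}$ denotes the gradient of the final scalar output with respect to $Z$.

The first three lines give the standard matrix-calculus VJP rules for `matmul`, `solve`, and `logdet`. These are textbook identities, not necessarily the exact form used in this engine; the `cholesky` rule is longer and is omitted.

The last two lines give the closed-form gradients of the Gaussian log-likelihood that such rules should reproduce. They assume a symmetric $\Sigma$ whose entries are treated as independent.

```latex
\begin{aligned}
C = AB &: \quad \bar{A} = \bar{C}\,B^{\top}, \qquad \bar{B} = A^{\top}\bar{C} \\
x = A^{-1}b &: \quad \bar{b} = A^{-\top}\bar{x}, \qquad \bar{A} = -\bar{b}\,x^{\top} \\
y = \log\det A &: \quad \bar{A} = \bar{y}\,A^{-\top} \\[4pt]
\ell(\mu, \Sigma) &= -\tfrac{1}{2}\, r^{\top}\Sigma^{-1} r - \tfrac{1}{2}\log\det\Sigma - \tfrac{d}{2}\log 2\pi, \qquad r = x - \mu \\
\frac{\partial \ell}{\partial \mu} &= \Sigma^{-1} r, \qquad
\frac{\partial \ell}{\partial \Sigma} = \tfrac{1}{2}\left(\Sigma^{-1} r\, r^{\top} \Sigma^{-1} - \Sigma^{-1}\right)
\end{aligned}
```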